We've all seen the demos online. An “AI Agent” autonomously surfs the web, books a flight, and orders a pizza, all from a single-sentence command. It feels like the future, something straight out of a sci-fi film.

So you dive in. You grab a shiny framework like LangChain or CrewAI, stitch together a few LLMs, give them elaborate backstories (“You are a world-class market researcher with 20 years of experience in Fortune 500 strategy”), and connect them to a stack of APIs. For a moment, it feels like you’re conducting a symphony of intelligent bots—your own personal team of tireless virtual assistants.

And then… the symphony falls apart.

Real-world scenario #1: The hallucinating researcher

You’ve tasked your “researcher agent” with finding market trends for your startup’s pitch deck. It confidently sends back a polished list of competitors, except one of them doesn’t actually exist. The agent invented an entire website, complete with a fake domain and pricing model. You only catch it when you try to visit the link during a live client meeting.

Real-world scenario #2: The sloppy data-entry assistant

Your “data-entry agent” is supposed to take scraped customer feedback and log it into your CRM. Instead, it forgets to format the JSON correctly—randomly mixing up date formats, breaking field mappings, and forcing you to manually fix hundreds of entries before the next campaign.

Real-world scenario #3: The infinite loop disaster

Your “coordinator agent” is meant to break down a large task into smaller steps and assign them to other agents. One day, it gets stuck in a loop—assigning and re-assigning the same subtask endlessly while burning through $50 of API credits in under an hour. By the time you notice, the logs are so noisy you can’t even debug where it went wrong.

This isn’t a hypothetical cautionary tale—it’s the day-to-day reality for countless developers, product managers, and startups seduced by the hype of autonomous agents. They look magical in demos, but in production they often prove brittle, opaque, and painfully hard to maintain. For most real-world use cases, they’re not just overkill—they’re a liability.

The good news? There’s a better way.

The solution isn’t to abandon AI altogether—it’s to design your workflows with the right level of complexity, reliability, and control. Instead of unleashing free-roaming agents into your production environment, you can adopt proven, simpler patterns that:

- Keep you in control of execution steps

- Are easy to debug when something goes wrong

- Work consistently without bleeding your API budget

In this guide, we’ll break down five reliable, debuggable workflow patterns that cover the large majority of real-world needs, without the agent-induced headaches.

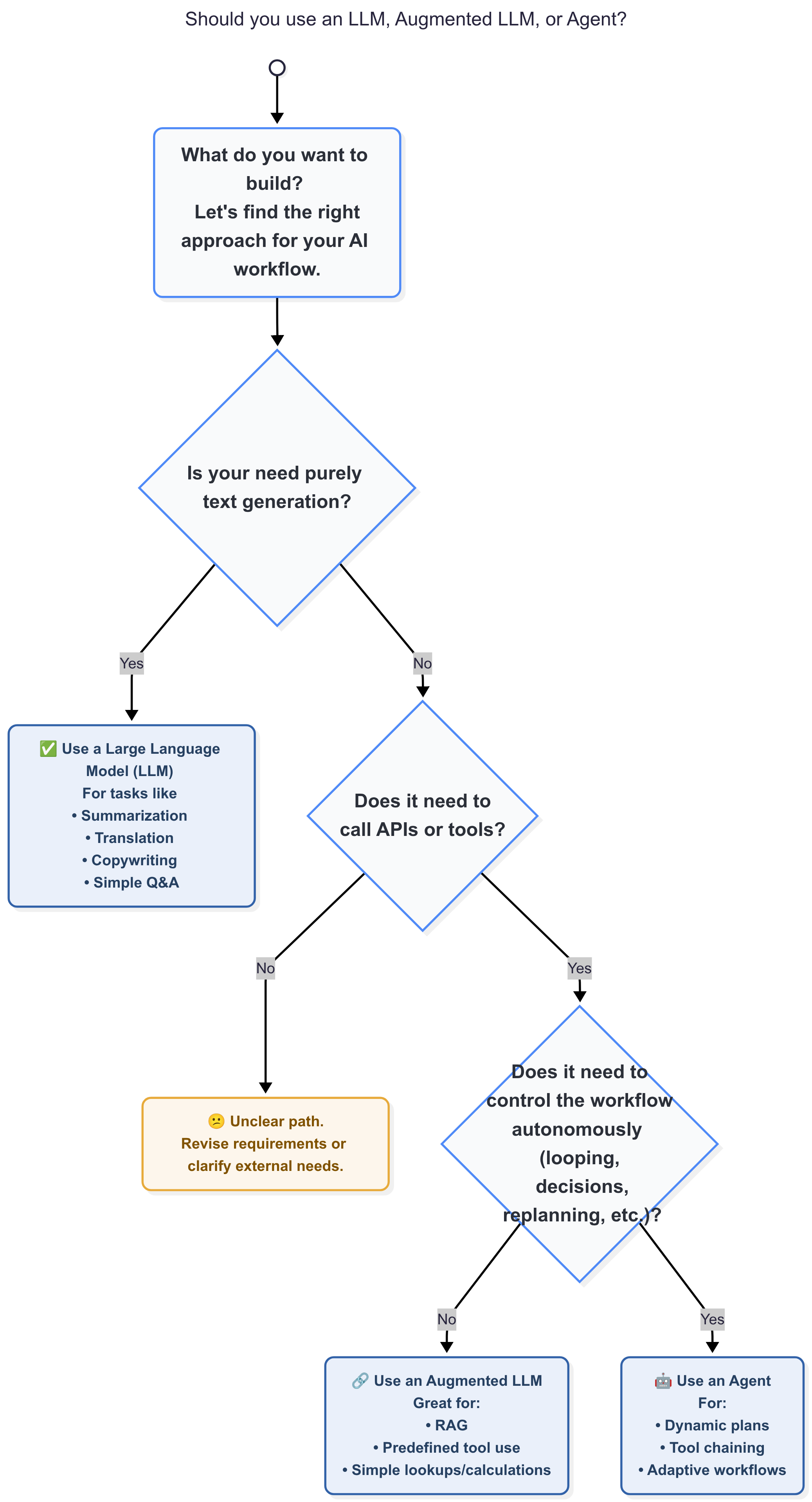

First, Your Sanity Check: The “Should I Use an Agent?” Flowchart

Before writing a single line of agentic code, walk through this quick reality check. This decision tree—built from hard-earned lessons in the trenches—can save you weeks of frustration.

Notice how tiny that “Use an Agent” box is? That’s not an accident. The road to a full-blown agent is narrow, risky, and often unnecessary. In most cases, a more structured, predictable approach will get you to the finish line faster. Let’s dig into those safer, saner options.

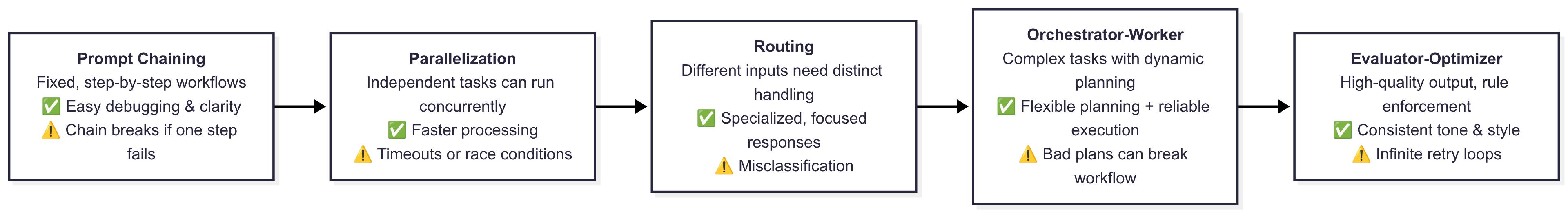

The Building Blocks: 5 Powerful LLM Workflow Patterns

When building AI-powered applications, structure is your best friend. These five workflow patterns provide clarity, predictability, and control—saving you from chaotic, brittle systems. Start with the simplest pattern that fits your need and only add complexity when absolutely necessary.

Pattern 1: Prompt Chaining (The Assembly Line)

What it is:

A series of simple, well-defined steps where the output of one becomes the input for the next, like an assembly line. This is great when you have a fixed, predictable workflow.

Real-World Example: Financial News Summarizer

Imagine you want to get daily summaries of financial news articles about a company.

- Step 1: Extract structured financial data from a raw news article (e.g., revenue, profit margin, stock change).

- Step 2: Identify key qualitative themes in the article (e.g., product launch, competition).

- Step 3: Generate a concise, human-readable summary using both structured data and themes.
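Because each step in a chain is an ordinary function, it can check its own output in plain code before the next step runs. Here is a minimal sketch of Step 1 with such a validation gate. It assumes a hypothetical `call_llm(prompt) -> str` function (stubbed with a canned placeholder reply so it runs offline); the prompt wording and required field names are illustrative assumptions, not a specific library's API.

```python
import json

REQUIRED_FIELDS = {"revenue", "profit_margin", "stock_change"}

# Hypothetical LLM client, stubbed with a canned placeholder reply so the sketch runs offline.
def call_llm(prompt: str) -> str:
    return '{"revenue": "4.2B", "profit_margin": "18%", "stock_change": "+3.1%"}'

def extract_financials(article_text: str) -> dict:
    """Step 1 of the chain, with a validation gate on the model's output."""
    prompt = (
        "Return ONLY a JSON object with keys revenue, profit_margin, "
        f"stock_change for this article:\n{article_text}"
    )
    raw = call_llm(prompt)
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Step 1 returned invalid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Step 1 must return a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        # Fail fast: stop the chain rather than pass incomplete data to Step 2.
        raise ValueError(f"Step 1 output is missing fields: {sorted(missing)}")
    return data

print(extract_financials("Acme Corp reported quarterly results..."))
```

When a run goes wrong, the exception names the step that failed, which is exactly the debuggability that makes chains easier to maintain than a free-roaming agent.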

Simple Code Snippet:

```python
def run_financial_summary_chain(article_text):
    # Step 1: Extract structured financial data
    structured_data = extract_financials(article_text)

    # Step 2: Identify key themes based on article and extracted data
    themes = identify_key_themes(article_text, structured_data)

    # Step 3: Generate the final summary
    summary = generate_bullet_point_summary(structured_data, themes)

    return summary
```

Pattern 2: Parallelization (The Multi-Lane Highway)

What it is:

Run multiple independent tasks simultaneously to speed up processing, then combine the results.
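A minimal, self-contained sketch of this fan-out/fan-in shape using Python's `asyncio.gather`, borrowing the trip-planning lookups from the itinerary example. The three lookup functions are hypothetical stand-ins that sleep to simulate network or LLM latency; in practice each would call a real API.

```python
import asyncio
import time

# Hypothetical lookups; asyncio.sleep stands in for network/LLM latency.
async def find_flights(city: str) -> str:
    await asyncio.sleep(0.2)
    return f"3 flight options to {city}"

async def find_hotels(city: str, stay: str) -> str:
    await asyncio.sleep(0.2)
    return f"5 hotels in {city} for {stay}"

async def list_top_attractions(city: str) -> str:
    await asyncio.sleep(0.2)
    return f"Top 10 attractions in {city}"

async def build_itinerary(city: str, stay: str) -> dict:
    # Fan out: the three calls don't depend on each other, so run them concurrently.
    flights, hotels, attractions = await asyncio.gather(
        find_flights(city),
        find_hotels(city, stay),
        list_top_attractions(city),
    )
    # Fan in: combine the independent results into one answer.
    return {"flights": flights, "hotels": hotels, "attractions": attractions}

start = time.perf_counter()
itinerary = asyncio.run(build_itinerary("Rome", "3 days"))
elapsed = time.perf_counter() - start
print(itinerary)
print(f"Finished in {elapsed:.2f}s")
```

Because the calls overlap, total time is roughly that of the slowest call rather than the sum of all three. With blocking (non-async) client libraries, `concurrent.futures.ThreadPoolExecutor` gives the same effect.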

Real-World Example: Travel Itinerary Builder

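Planning a three-day trip to Rome takes three lookups that don't depend on one another, so all three can run at the same time:

```python
# Independent tasks: none needs another's output, so they can run in parallel
flights = find_flights("Rome")
hotels = find_hotels("Rome", "3 days")
attractions = list_top_attractions("Rome")
```

Pattern 3: Routing (The Smart Receptionist)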

Use an LLM to classify the user input and route the request to the correct specialized handler or tool.
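A minimal routing sketch. The category names, handlers, and the keyword-based `classify` function are hypothetical; in a real system `classify` would be a single LLM call constrained to answer with one label from a fixed set. The design point is that the LLM only picks a label, while plain code decides what actually runs, with a fallback for anything unexpected.

```python
from typing import Callable

# Hypothetical classifier. In production this would be a constrained LLM call
# ("Answer with exactly one of: billing, technical, sales"); a keyword match
# stands in here so the example runs offline.
def classify(query: str) -> str:
    q = query.lower()
    if "invoice" in q or "refund" in q:
        return "billing"
    if "error" in q or "crash" in q:
        return "technical"
    if "pricing" in q or "demo" in q:
        return "sales"
    return "unknown"

def handle_billing(query: str) -> str:
    return "Routed to billing workflow"

def handle_technical(query: str) -> str:
    return "Routed to technical support workflow"

def handle_sales(query: str) -> str:
    return "Routed to sales workflow"

def handle_fallback(query: str) -> str:
    return "Escalated to a human agent"

ROUTES: dict[str, Callable[[str], str]] = {
    "billing": handle_billing,
    "technical": handle_technical,
    "sales": handle_sales,
}

def route(query: str) -> str:
    label = classify(query)
    # Never trust the label blindly: unknown or malformed labels go to the fallback.
    handler = ROUTES.get(label, handle_fallback)
    return handler(query)

print(route("I need a refund for last month's invoice"))
print(route("What's the weather like?"))
```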

Pattern 4: Orchestrator-Worker (The Project Manager)

An LLM acts as an orchestrator that breaks a complex task into steps, but deterministic code workers execute each step.
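A minimal sketch of this split, using invoice processing as a hypothetical task. `plan_task` stands in for an LLM call that returns a JSON plan; the worker names, the plan format, and the `MAX_STEPS` cap are assumptions for illustration. The LLM only proposes the plan: code checks every step against a fixed set of workers, enforces a step limit, and keeps an audit trail.

```python
import json

# Hypothetical orchestrator: a real one would prompt an LLM for a JSON plan.
# A canned plan stands in here so the example runs offline.
def plan_task(task: str) -> str:
    return json.dumps([
        {"worker": "fetch_invoice", "arg": "INV-001"},
        {"worker": "validate_totals", "arg": "INV-001"},
        {"worker": "post_to_ledger", "arg": "INV-001"},
    ])

# Deterministic workers: ordinary, testable functions (bodies are placeholders).
WORKERS = {
    "fetch_invoice": lambda inv: f"fetched {inv}",
    "validate_totals": lambda inv: f"validated {inv}",
    "post_to_ledger": lambda inv: f"posted {inv}",
}

MAX_STEPS = 10  # hard cap so a bad plan can't run forever

def execute_task(task: str) -> list[str]:
    plan = json.loads(plan_task(task))
    if len(plan) > MAX_STEPS:
        raise ValueError(f"Plan has {len(plan)} steps; limit is {MAX_STEPS}")
    log = []
    for i, step in enumerate(plan, start=1):
        worker = WORKERS.get(step["worker"])
        if worker is None:
            # Reject steps the orchestrator invented instead of improvising.
            raise ValueError(f"Step {i}: unknown worker {step['worker']!r}")
        result = worker(step["arg"])
        log.append(f"step {i}: {step['worker']} -> {result}")  # audit trail
    return log

for line in execute_task("Process invoice INV-001"):
    print(line)
```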

Pattern 5: Evaluator-Optimizer (The Quality Control Loop)

One LLM generates content, another evaluates it against criteria. If it fails, feedback guides a revised generation. Loop until quality goals are met or max retries hit.
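A minimal sketch of the loop. Both `generate` and `evaluate` are hypothetical stand-ins for LLM calls: the generator folds the previous critique into its next draft, and the evaluator here applies a single toy criterion (a minimum length) where a real one would score the draft against explicit quality criteria. The retry budget is what keeps the loop from running indefinitely.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Review:
    passed: bool
    feedback: str

# Hypothetical generator: a real one would prompt an LLM, including prior feedback.
def generate(topic: str, feedback: Optional[str]) -> str:
    draft = f"Headline about {topic}"
    if feedback:
        draft += f" (revised: {feedback})"
    return draft

# Hypothetical evaluator: a real one would be a second LLM call checking explicit
# criteria. The only criterion here is a minimum length.
def evaluate(draft: str, min_length: int = 40) -> Review:
    if len(draft) >= min_length:
        return Review(True, "")
    return Review(False, "add a concrete detail")

def evaluator_optimizer(topic: str, max_retries: int = 3) -> tuple[str, int, bool]:
    feedback = None
    draft = ""
    for attempt in range(1, max_retries + 1):
        draft = generate(topic, feedback)
        review = evaluate(draft)
        if review.passed:
            return draft, attempt, True
        feedback = review.feedback  # feed the critique into the next attempt
    # Budget exhausted: return the last draft and flag it for human review.
    return draft, max_retries, False

print(evaluator_optimizer("Q3 earnings"))
```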

By starting simple and using these building blocks, you’ll avoid the pitfalls of fragile autonomous agents and build AI workflows that just work.

Okay, So When Should I Actually Use an Agent?

After exploring all these workflow patterns, you might wonder: are agents even worth it? The answer is yes, but only for specific, open-ended scenarios where the potential payoff is high and a human stays in the loop to review the agent's work.

5 Good Use Cases for Agents

- Data Science Assistant

Imagine an agent equipped with tools to run SQL queries, create data visualizations, and summarize key insights. A data scientist might give it a broad objective like: "Investigate why user churn increased last month."

- Creative Writing Partner

Picture a writer working with an AI agent to brainstorm headlines, suggest plot twists, or refine prose style. The agent generates ideas and drafts, but the human provides final judgment, creative direction, and nuance that AI cannot reliably produce alone.

- DevOps & Incident Response Agent

An on-call engineer is alerted to a performance degradation. They give the agent a high-level instruction, and it correlates observability data (logs, metrics, and traces) to suggest likely causes and fixes.

- Contract Analysis & Risk Assessment Agent

An agent reviews long contracts and highlights deviations from a template so a lawyer can focus on critical issues.

- Recruitment & Sourcing Co-pilot

An agent pre-vets candidates and drafts outreach to speed the recruiter’s workflow.
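Even in these cases, an agent works best inside hard boundaries. Here is a minimal sketch of a bounded agent loop for the data science example. `choose_action` is a hypothetical stand-in for the LLM's decision step, and the tool bodies return placeholder text. Tools come from an explicit allowlist, the loop has a step budget (the safeguard missing in the runaway coordinator scenario), and every observation is kept so a human can audit the trace.

```python
from typing import Callable

# Tool allowlist: the agent may only call these (bodies return placeholders).
TOOLS: dict[str, Callable[[str], str]] = {
    "run_sql": lambda query: "churn by month: <placeholder rows>",
    "summarize": lambda text: f"Summary: {text}",
}

# Hypothetical policy standing in for an LLM that picks the next action
# from the goal and the history so far. Returns (tool_name, argument).
def choose_action(goal: str, history: list[str]) -> tuple[str, str]:
    if not history:
        return "run_sql", "SELECT month, churn_rate FROM churn"
    if len(history) == 1:
        return "summarize", history[-1]
    return "finish", history[-1]

def run_agent(goal: str, max_steps: int = 5) -> tuple[str, list[str]]:
    history: list[str] = []
    for _ in range(max_steps):
        tool, arg = choose_action(goal, history)
        if tool == "finish":
            return arg, history
        if tool not in TOOLS:
            raise ValueError(f"Agent requested unapproved tool {tool!r}")
        history.append(TOOLS[tool](arg))  # every observation is kept for review
    # Step budget exhausted: stop and hand over to a human instead of looping.
    return "Stopped: step budget exhausted", history

answer, trace = run_agent("Investigate why user churn increased last month.")
print(answer)
```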

When NOT to Use Agents (And What to Use Instead)

Enterprise Automation

Need to process invoices, update customer accounts, or manage inventory? These workflows demand stability and predictability. Use the Orchestrator-Worker pattern instead of free-roaming agents.

High-Stakes Decisions

For financial, medical, or legal decisions, favor deterministic, auditable workflows and well-tested business rules over probabilistic agents.
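What "deterministic and auditable" can look like in practice: explicit rules checked in a fixed order, where every decision records which rule produced it. The refund scenario, thresholds, and rule names here are hypothetical, chosen only to illustrate the shape.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Decision:
    approved: bool
    rule: str  # which rule fired, for the audit log

# Hypothetical business rules, checked in order; the first match wins.
def decide_refund(amount: float, days_since_purchase: int, prior_refunds: int) -> Decision:
    if amount <= 0:
        return Decision(False, "R0: invalid amount")
    if days_since_purchase > 30:
        return Decision(False, "R1: outside 30-day window")
    if prior_refunds >= 3:
        return Decision(False, "R2: refund limit reached")
    if amount > 500:
        return Decision(False, "R3: above auto-approval limit, escalate to manual review")
    return Decision(True, "R4: within policy")

print(decide_refund(120.0, 10, 0))
print(decide_refund(800.0, 5, 0))
```

Every rule can be unit-tested on its own, and the same inputs always produce the same decision, which is what auditors and regulators need.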

Final Takeaway: Build Systems, Not Just Agents

The allure of the all-powerful, autonomous agent is undeniable. The demos, the hype, the promise of a bot that can do everything—it’s compelling. But the reality of building useful, reliable AI applications lies in structure, control, and observability, not in chasing complexity for its own sake.

Your future self—and your users—will thank you.